Essential tools for the secure LLM development journey.

In the fast-moving field of artificial intelligence, Large Language Models (LLMs) are a significant advancement. Trained on extensive text data, these models produce human-like writing and have shown great potential across industries. Integrating them into applications, however, requires meticulous attention: their complexity and interactive nature demand precise calibration, constant supervision, and rigorous testing.

To navigate these complexities effectively, specialized tools like LangSmith, NeMo Guardrails, and PromptFlow have been engineered. These solutions are tailored to simplify the intricate journey of LLM development, deployment, and management. They streamline processes, fortify safety controls, and ensure a smooth development cycle, playing an indispensable role in crafting and refining LLM-driven applications. Let’s have a look at them:

LangSmith: Your Unified Platform for LLM Application Development

LangSmith serves as an integrated solution bridging the gap between LLM prototypes and production-ready applications.

It facilitates debugging, testing, monitoring, and evaluation of LLM applications, empowering developers to optimize their applications for production use.

When to Use LangSmith: Debugging, Testing, and Monitoring

LangSmith comes into play when debugging new chains, agents, or tools. It visualizes how the different LLM components interact, which makes it valuable for evaluating prompts and models. It also shows the sequence of calls made to the LLM, tracks token usage, and helps diagnose latency issues.

Evaluating and Improving LLM Components with LangSmith

Developers can use LangSmith to understand the sequence of calls to the LLM, track token usage, and manage costs. It offers a clear interface for inspecting the inputs and outputs of each data point, which makes it easier to improve the application.
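To make the idea of tracing concrete, here is a minimal sketch in plain Python of what recording the call sequence, token usage, and latency could look like. It does not use the LangSmith SDK: the `Tracer` and `Run` classes, the `fake_llm` stand-in, and the whitespace-based token count are all hypothetical and exist only for illustration.

```python
import time
from dataclasses import dataclass, field
from functools import wraps


def count_tokens(text):
    """Crude whitespace token count; a real tokenizer would give different numbers."""
    return len(str(text).split())


@dataclass
class Run:
    """One recorded call: what went in, what came out, and what it cost."""
    name: str
    kind: str
    inputs: dict
    output: str = ""
    latency_s: float = 0.0
    tokens: int = 0


@dataclass
class Tracer:
    """Collects runs in call order so a chain can be inspected afterwards."""
    runs: list = field(default_factory=list)

    def trace(self, kind):
        def decorator(fn):
            @wraps(fn)
            def wrapper(**kwargs):  # keyword arguments only, to keep inputs named
                run = Run(name=fn.__name__, kind=kind, inputs=dict(kwargs))
                self.runs.append(run)  # appended before the call: parents precede children
                start = time.perf_counter()
                run.output = fn(**kwargs)
                run.latency_s = time.perf_counter() - start
                run.tokens = sum(count_tokens(v) for v in kwargs.values()) + count_tokens(run.output)
                return run.output
            return wrapper
        return decorator

    def llm_tokens(self):
        """Sum tokens over model calls only, so nested chain steps are not double counted."""
        return sum(r.tokens for r in self.runs if r.kind == "llm")


tracer = Tracer()


@tracer.trace("llm")
def fake_llm(prompt):
    """Stand-in for a model call; returns a canned answer."""
    return "Paris is the capital of France."


@tracer.trace("chain")
def answer_question(question):
    return fake_llm(prompt=f"Answer briefly: {question}")


answer_question(question="What is the capital of France?")
for run in tracer.runs:
    print(f"{run.kind:5} {run.name}: {run.tokens} tokens, {run.latency_s * 1000:.2f} ms")
print("LLM tokens:", tracer.llm_tokens())
```

Recording each run before its function executes keeps the list in call order, which is what lets a slow or expensive step be traced back to the chain that triggered it.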

NeMo Guardrails: Ensuring Safety in User-LLM Interaction

As LLMs are increasingly integrated into conversational systems, ensuring safe interactions aligned with organizational policies becomes paramount.

NeMo Guardrails, an open-source toolkit by NVIDIA, provides programmatic control over LLM systems, establishing boundaries in conversations and preventing engagement in unwanted topics.

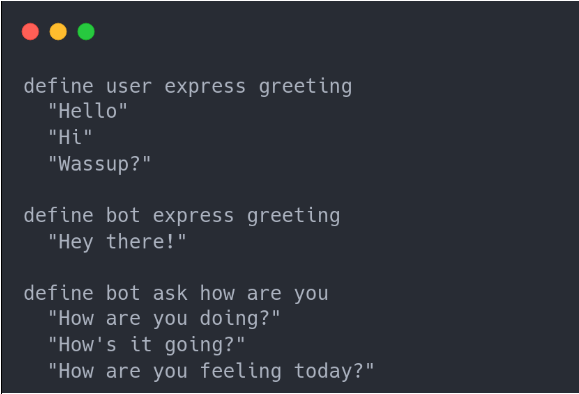

NeMo Guardrails: Providing Essential Validation Scenarios

Using the Colang modeling language, NeMo Guardrails lets developers define Canonical Forms, which give the LLM context and help ensure the intended response to user inputs. It also enforces response quality through dialog flows, which make the bot's behavior deterministic in certain situations.

Fig 1: Three Canonical Forms: example greeting messages from the user, greeting messages for the bot, and follow-up questions the bot asks the user after greeting.

These forms supply context to the LLM, helping the understanding of user intent and enabling behavior according to specific dialogue flows. This ensures that the LLM responds to user inputs as intended.
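The mapping from a raw user message to a Canonical Form can be illustrated with a small Python sketch. This is not how NeMo Guardrails is implemented and it is not Colang: the form names, the example utterances, the word-overlap score, and the `threshold` value are assumptions chosen to keep the example self-contained.

```python
import re

# Hypothetical canonical forms, each with a few example utterances.
CANONICAL_FORMS = {
    "express greeting": ["hello", "hi there", "good morning"],
    "ask about politics": [
        "who should i vote for",
        "what do you think about the election",
    ],
}


def tokens(text):
    """Lower-case the text and return its set of words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def to_canonical_form(utterance, threshold=0.3):
    """Return the best-matching canonical form, or None if nothing is close enough.

    Similarity here is simple word overlap (Jaccard), used as a stand-in
    for whatever matching a real toolkit performs.
    """
    words = tokens(utterance)
    best_form, best_score = None, 0.0
    for form, examples in CANONICAL_FORMS.items():
        for example in examples:
            example_words = tokens(example)
            union = words | example_words
            score = len(words & example_words) / len(union) if union else 0.0
            if score > best_score:
                best_form, best_score = form, score
    return best_form if best_score >= threshold else None


print(to_canonical_form("Hi there!"))
print(to_canonical_form("Who should I vote for?"))
print(to_canonical_form("Convert 3 miles to kilometres"))
```

Messages that match no form return `None`, which leaves the application free to pass them to the LLM without any extra guidance.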

In addition to controlling the topics of conversation, NeMo Guardrails also helps ensure the quality of responses generated by LLMs.

It allows developers to specify dialog flows, which are descriptions of how the dialog between the user and the bot should unfold. These flows include sequences of Canonical Forms as well as added logic like branching and context variables.

By specifying dialog flows, developers can achieve deterministic behavior from the bot in certain situations, thereby enforcing specific policies or preventing undesired behaviors.

Fig 2: Two flow definitions that reject questions about politics and the stock market (content rejection).
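The deterministic behavior that dialog flows provide can be sketched in Python as a lookup from the user's Canonical Form to a fixed sequence of bot steps. The flow table, the bot messages, the `name` context variable, and the `fake_llm` fallback are hypothetical; a real Colang flow definition looks different and supports richer branching.

```python
# Hypothetical flows: a user canonical form mapped to the bot steps that follow it.
FLOWS = {
    "express greeting": ["express greeting", "ask how are you"],
    "ask about politics": ["inform cannot answer"],
    "ask about stock market": ["inform cannot answer"],
}

# Fixed bot messages; placeholders are filled from context variables.
BOT_MESSAGES = {
    "express greeting": "Hello {name}!",
    "ask how are you": "How are you doing today?",
    "inform cannot answer": "Sorry, I cannot discuss that topic.",
}


def fake_llm(message):
    """Stand-in for a model call, used only when no flow applies."""
    return f"(model-generated reply to: {message})"


def respond(user_form, context, llm=fake_llm):
    """Follow a matching flow deterministically; otherwise defer to the LLM."""
    steps = FLOWS.get(user_form)
    if steps is None:
        return [llm(context["last_user_message"])]
    return [BOT_MESSAGES[step].format(**context) for step in steps]


context = {"name": "Ada", "last_user_message": "Which stocks should I buy?"}
print(respond("express greeting", context))
print(respond("ask about stock market", context))
print(respond(None, context))
```

Because rejected topics never reach the model in this sketch, the refusal text is identical on every run, which is the property that makes a policy enforceable.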

PromptFlow: Streamlining Your LLM Application Development

Offered by Azure Machine Learning, PromptFlow revolutionizes the development process of LLM-powered applications.

It acts as a comprehensive solution, simplifying the development cycle, promoting team collaboration, and supporting the creation and evaluation of prompt variants.

The Advantages of Using Azure Machine Learning PromptFlow

PromptFlow lets developers build executable flows that link LLMs, prompts, and Python tools through a visualized graph. It improves collaboration by letting developers share and iterate on flows. It also supports large-scale testing of prompt variants, so teams can identify and use the most effective prompts.
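As a rough illustration of a flow as a graph of prompt, LLM, and Python steps, the sketch below runs a tiny dependency graph once per prompt variant. It uses only the Python standard library and not the PromptFlow SDK; `run_flow`, `build_flow`, the variant templates, and the upper-casing `fake_llm` are assumptions made for the example.

```python
from graphlib import TopologicalSorter


def fake_llm(prompt):
    """Stand-in for a model call: echoes the prompt in upper case."""
    return prompt.upper()


def run_flow(nodes, inputs):
    """Run nodes in dependency order.

    Each node is a pair (function, [names of the results it depends on]).
    """
    graph = {name: deps for name, (_, deps) in nodes.items()}
    results = dict(inputs)
    for name in TopologicalSorter(graph).static_order():
        if name in results:  # flow inputs are already resolved
            continue
        fn, deps = nodes[name]
        results[name] = fn(*(results[dep] for dep in deps))
    return results


# Hypothetical prompt variants to compare.
PROMPT_VARIANTS = {
    "variant_0": "Summarize: {text}",
    "variant_1": "Summarize in one sentence for a busy reader: {text}",
}


def build_flow(template):
    """Link a prompt step, an LLM step, and a Python evaluation step."""
    return {
        "prompt": (lambda text: template.format(text=text), ["text"]),
        "completion": (fake_llm, ["prompt"]),
        "word_count": (lambda completion: len(completion.split()), ["completion"]),
    }


for name, template in PROMPT_VARIANTS.items():
    result = run_flow(build_flow(template), {"text": "LLM tools reduce risk"})
    print(name, result["word_count"], result["completion"])
```

Keeping every step as a named node means a variant can be swapped by replacing a single template while the rest of the graph, including the evaluation step, stays the same.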

Tool comparison

| Tool | Open Source | Benchmark Evaluation | Flow Development | Log Tracing | Validation Scenarios |
|---|---|---|---|---|---|
| LangSmith | ✗ | ✓ | ✗ | ✓ | ✗ |
| NeMo Guardrails | ✓ | ✗ | ✓ | ✗ | ✓ |
| PromptFlow | ✓ | ✓ | ✓ | ✓ | ✓ |

Our Conclusion

Harnessing the power of LLMs in application development requires precision and rigorous testing. LangSmith optimizes applications for production, NeMo Guardrails ensures safe user-LLM interactions, and PromptFlow streamlines the development process.

Together, these tools form a comprehensive suite enhancing the security and trustworthiness of LLMs in AI application development.

Integrating tools like these simplifies LLM application development. They address its practical challenges and make the resulting applications easier to secure and trust, which is why they belong in any successful AI development cycle.

Elevate Your LLM Development with us!

Ready to revolutionize your Large Language Model (LLM) application development?

Elevate your projects with cutting-edge tools, ensuring security, efficiency, and trustworthiness.

Transform your AI applications—start the journey with Kmeleon today!