LLM Tool Review: LangSmith - Streamline Gen AI Development at Scale

What is LangSmith?

LangSmith is a crucial tool developed by LangChain to address the challenges of building, managing, and optimizing applications with intelligent agents and Large Language Models (LLMs). It facilitates the entire lifecycle of an LLM application by providing robust features for debugging, testing, evaluating, and monitoring. With the ability to integrate with any LLM framework, LangSmith offers developers a versatile solution to navigate the complexities of application development, enhancing the reliability and performance of their intelligent agents. This article explores the key features and benefits of LangSmith in more detail.

Core Features and Capabilities

Debugging and Testing

LangSmith's core strength lies in its features for debugging, testing, evaluating, and monitoring LLM applications. Its tracing capabilities let developers follow data through each step of an application, such as prompts, model calls, and tool invocations, and observe how inputs are turned into outputs. This is essential for pinpointing where errors arise in the application. LangSmith also provides evaluation mechanisms for measuring performance against quality benchmarks, which is critical for applications that must meet high standards of accuracy and responsiveness. With these tools, developers can iterate with precision and resolve issues quickly, which contributes to the stability and reliability of the LLM solutions they build.

Figure 1. The evaluators project traces show the executed runs along with their status and metrics.

Evaluation and Auditing

One of the most valuable aspects of LangSmith is its evaluation and auditing capabilities. The platform provides a granular view of an LLM application's effectiveness through tracing, comprehensive testing datasets, and evaluation modules that include both standardized and custom evaluators. Additionally, human annotation tools allow for nuanced interpretation of results, ensuring a thorough evaluation process. These features enable developers to fine-tune their applications to high standards of performance and reliability.

Figure 2. Summary of evaluation runs in sample project.

Integration with LangChain

Seamless integration with LangChain is a cornerstone of the LangSmith platform, streamlining the development process for LLM-powered applications. This integration ensures that developers can smoothly transition through each stage of the application's lifecycle, from conception to deployment. LangSmith's toolkit supports the scaffolding of new applications, providing resources for testing and iterating on prototypes, and culminating in the refinement of production-ready solutions. By bridging various LLM frameworks and components, LangSmith facilitates a cohesive development environment, where the complexities of LLM integration are significantly reduced.

Figure 3. Snippet running LangSmith evaluator with a LangChain agent.

Deployment and Monitoring

LangSmith facilitates the deployment of LLM applications as REST APIs, and it is set to enhance this process further through integration with LangServe and FastAPI. The platform's monitoring capabilities let developers actively oversee their applications by tracking performance metrics and diagnosing operational issues. These capabilities are especially important in production environments, where real-time feedback and rapid troubleshooting are paramount. Real-world examples from Streamlit, Snowflake, and Boston Consulting Group demonstrate LangSmith's role in optimizing LLM applications, as stated in an announcement from LangChain. These testimonials underscore how LangSmith helps organizations maintain oversight of their deployments, so that applications not only perform consistently but also evolve based on ongoing feedback and operational insights.

Figure 4. Monitoring evaluators project in LangSmith portal.

Practical Examples and Use Cases

LangSmith has been adopted by various organizations to enhance the capabilities of LLM applications, refining processes that range from initial development stages to final deployment. Here are some examples illustrating its diverse applications:

Rakuten Group: The Rakuten Group, known for operating a major online shopping mall in Japan and more than 70 businesses across various sectors, incorporated LangChain and LangSmith into its operations. The integration aimed to deliver premium products tailored to the needs of its business clients and employees. The case study indicates that LangSmith was instrumental in enhancing the e-commerce experience by providing the infrastructure needed to develop and refine LLM applications for the group's wide range of businesses.

CommandBar: CommandBar, a user assistance platform, collaborated with LangChain to enhance their services. CommandBar helps software companies make their products user-friendly by capturing and predicting user intent, then delivering appropriate assistance. The partnership aimed to develop a Copilot User Assistant that leverages LLM capabilities to improve user interactions with software products. LangSmith’s tools were utilized to refine and optimize the user assistance provided by CommandBar, allowing for a more intuitive and responsive user experience.

Elastic: Elastic, a search analytics company serving over 20,000 customers globally, partnered with LangChain to launch the Elastic AI Assistant. This collaboration aimed to empower organizations to securely utilize search-powered AI, enabling anyone to find the answers they need swiftly and efficiently. LangSmith was likely used here to develop and fine-tune the Elastic AI Assistant, ensuring that it meets high-quality standards and provides a seamless user experience.

More information about LangSmith’s case studies can be found here: https://www.langchain.com/case-studies.

How to Monitor a LangChain Agent with LangSmith

1. Prerequisites

Before diving into LangSmith, you need to create an account and generate an API key on LangSmith's website. It's recommended to familiarize yourself with LangSmith through their documentation.

2. Setting up your environment

To start logging runs to LangSmith, configure your environment variables. These settings tell LangChain to log traces and specify the target project, the API key, and other necessary configuration.
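A minimal sketch of this setup in Python follows. It assumes the `LANGCHAIN_`-prefixed variable names that LangChain has documented for LangSmith tracing; newer SDK releases also accept `LANGSMITH_`-prefixed equivalents. The project name and the placeholder key are illustrative assumptions, not values from this article.

```python
import os
from uuid import uuid4

# Hypothetical project name; a short random suffix keeps repeated runs apart.
project_name = f"langsmith-walkthrough-{uuid4().hex[:8]}"

os.environ["LANGCHAIN_TRACING_V2"] = "true"  # turn on trace logging
os.environ["LANGCHAIN_ENDPOINT"] = "https://api.smith.langchain.com"
os.environ["LANGCHAIN_PROJECT"] = project_name  # traces are grouped under this project

# Placeholder only: supply your real key, ideally from a secrets manager.
os.environ.setdefault("LANGCHAIN_API_KEY", "<your-api-key>")
```

Setting the variables in a shell profile or a `.env` file works equally well; the point is that they are in place before any LangChain code runs.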

3. Create a LangSmith Client

Create the LangSmith client to begin interacting with the API:
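As a sketch, assuming the `langsmith` Python package is installed and the API key is already set in the environment, the client can be created without arguments. The helper name `make_client` is hypothetical and only wraps the call so the import stays local.

```python
def make_client():
    """Return a LangSmith client configured from environment variables."""
    # Imported inside the function so this sketch still loads on machines
    # where the `langsmith` package is not installed (pip install langsmith).
    from langsmith import Client

    # With no arguments, Client() reads the API key and endpoint
    # from the environment.
    return Client()
```

A typical call is `client = make_client()`; the later steps pass this object to the dataset and evaluation helpers.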

4. Create a LangChain Component

Next, set up a LangChain component, such as a ReAct-style agent with a search tool, and log runs to LangSmith. This sample agent can use the DuckDuckGo search tool:
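One possible version of such an agent is sketched below. It assumes a LangChain 0.1 to 0.3 style installation with `langchain`, `langchain-community`, `langchain-openai`, and `duckduckgo-search` available, plus an OpenAI API key in the environment. The choice of OpenAI, the model name, and the `hwchase17/react` hub prompt are assumptions made for illustration; agent APIs have changed between LangChain releases, so check the version you have installed.

```python
def build_agent():
    """Build a ReAct-style agent with a DuckDuckGo search tool (sketch)."""
    # Lazy imports so the sketch loads even if these packages are missing.
    from langchain import hub
    from langchain.agents import AgentExecutor, create_react_agent
    from langchain_community.tools import DuckDuckGoSearchRun
    from langchain_openai import ChatOpenAI

    llm = ChatOpenAI(model="gpt-3.5-turbo", temperature=0)  # assumed model
    tools = [DuckDuckGoSearchRun()]
    prompt = hub.pull("hwchase17/react")  # community ReAct prompt (assumed)
    agent = create_react_agent(llm, tools, prompt)
    # handle_parsing_errors lets the agent recover from malformed LLM output.
    return AgentExecutor(agent=agent, tools=tools, handle_parsing_errors=True)
```

With the tracing variables set, a call such as `build_agent().invoke({"input": "What is LangSmith?"})` should be logged to the configured project without further code changes.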

You will now see your agent traces in the Projects section of your LangSmith portal.

How to Create an Automatic Evaluation Workflow

The following steps guide you through creating a dataset, initializing a new agent for benchmarking, configuring and running evaluations, and exporting the results for further analysis.

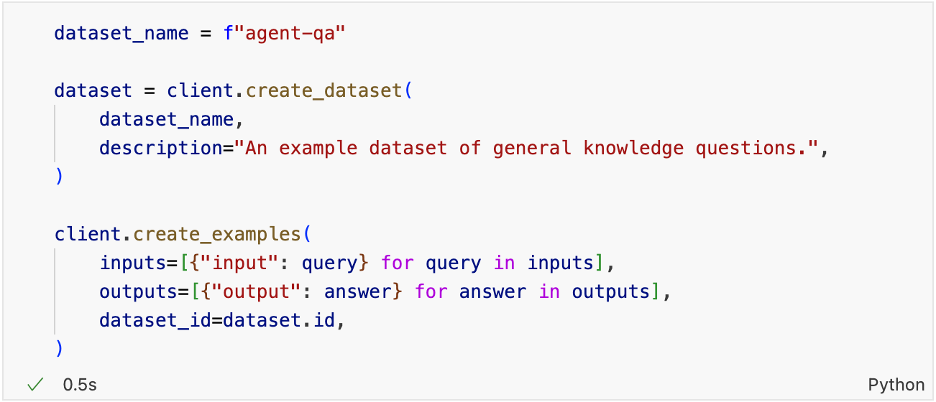

1. Create a LangSmith Dataset

Below, we use the LangSmith client to create a dataset from the input questions above and a list of labels. You will use these later to measure the performance of a new agent. A dataset is a collection of examples, which are simply input-output pairs you can use as test cases for your application.
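A sketch of this step, assuming a LangSmith `Client` instance is passed in as `client`. `create_dataset` and `create_examples` are LangSmith client methods; the helper names, the `"input"`/`"output"` keys, and the sample questions and labels are hypothetical.

```python
def build_examples(questions, labels):
    """Pair each question with its label as input/output dictionaries."""
    if len(questions) != len(labels):
        raise ValueError("questions and labels must have the same length")
    inputs = [{"input": q} for q in questions]
    outputs = [{"output": a} for a in labels]
    return inputs, outputs


def upload_dataset(client, dataset_name, questions, labels):
    """Create a dataset and add one example per question/label pair."""
    inputs, outputs = build_examples(questions, labels)
    dataset = client.create_dataset(
        dataset_name=dataset_name,
        description="Questions and reference answers for agent benchmarking.",
    )
    client.create_examples(inputs=inputs, outputs=outputs, dataset_id=dataset.id)
    return dataset


# Hypothetical sample data, not taken from the original walkthrough.
questions = ["Which company develops LangSmith?", "What does LangSmith trace?"]
labels = ["LangChain", "Runs of LLM applications"]
```

Calling `upload_dataset(client, "agent-qa-demo", questions, labels)` would then create the dataset under that (hypothetical) name.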

For more information on datasets, including how to create them from CSVs or other files or how to create them in the platform, please refer to the LangSmith documentation (https://docs.smith.langchain.com/).

2. Initialize a new agent to benchmark

LangSmith allows evaluating any LLM, chain, agent, or custom function. To ensure stateful conversational agents don't share memory between dataset runs, use a chain_factory function to initialize a new agent for each call.
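The reason for the factory can be seen in a small, self-contained sketch. `ToyAgent` is a hypothetical stand-in for a stateful conversational agent, not a LangChain class; in practice the factory body would construct the real agent.

```python
class ToyAgent:
    """Stand-in for a stateful conversational agent (hypothetical)."""

    def __init__(self):
        self.memory = []  # conversation history kept between calls

    def invoke(self, inputs):
        self.memory.append(inputs["input"])
        return {"output": f"seen {len(self.memory)} message(s)"}


def chain_factory():
    """Return a brand-new agent so no history leaks between dataset rows."""
    return ToyAgent()


# Sharing one instance lets earlier rows influence later ones...
shared = ToyAgent()
shared_outputs = [shared.invoke({"input": q})["output"] for q in ["a", "b"]]

# ...whereas a factory gives every row a clean slate.
fresh_outputs = [chain_factory().invoke({"input": q})["output"] for q in ["a", "b"]]
```

Here `shared_outputs` reports one and then two messages, while every entry of `fresh_outputs` reports one, which is the isolation a benchmark run needs.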

3. Configure evaluation

Manually comparing results can be time-consuming. Automated metrics and AI-assisted feedback are essential for efficient evaluation. Configure a custom run evaluator and other pre-implemented evaluators to assess performance.

Below, we will configure some pre-implemented run evaluators that do the following:

- Compare results against ground truth labels.

- Measure semantic (dis)similarity using embedding distance.

- Evaluate 'aspects' of the agent's response in a reference-free manner using custom criteria.

For a longer discussion of how to select an appropriate evaluator for your use case and how to create your own custom evaluators, please refer to the LangSmith documentation (https://docs.smith.langchain.com/).
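The configuration described above might look like the following sketch. It assumes a LangChain release that ships the `langchain.smith` module with `RunEvalConfig`. The custom evaluator `contains_reference`, the helper `build_eval_config`, and the `"output"` key are hypothetical choices for illustration.

```python
def contains_reference(run, example):
    """Custom run evaluator: 1 if the reference answer appears in the output."""
    prediction = str((run.outputs or {}).get("output", ""))
    reference = str((example.outputs or {}).get("output", ""))
    hit = bool(reference) and reference.lower() in prediction.lower()
    return {"key": "contains_reference", "score": int(hit)}


def build_eval_config():
    """Combine pre-implemented evaluators with the custom one above."""
    # Lazy import so the sketch loads where LangChain is not installed.
    from langchain.smith import RunEvalConfig

    return RunEvalConfig(
        evaluators=[
            "qa",                  # compare against ground-truth labels
            "embedding_distance",  # semantic (dis)similarity
            RunEvalConfig.Criteria("helpfulness"),  # reference-free criterion
        ],
        custom_evaluators=[contains_reference],
    )
```

The custom evaluator is plain Python, so it can be unit-tested on its own before it is attached to an evaluation run.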

4. Run the agent and evaluators

Evaluate your model by running it against the dataset and applying the configured evaluators to generate automated feedback.
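A sketch of this step, assuming the `run_on_dataset` function from `langchain.smith`. The wrapper name `run_benchmark` and its parameter names are hypothetical. Recent LangSmith SDK releases also expose an `evaluate` function that serves a similar purpose, so check which one matches your installed versions.

```python
def run_benchmark(client, dataset_name, agent_factory, evaluation_config):
    """Run a fresh agent over every dataset example and apply the evaluators."""
    # Lazy import so the sketch loads where LangChain is not installed.
    from langchain.smith import run_on_dataset

    return run_on_dataset(
        client=client,
        dataset_name=dataset_name,
        llm_or_chain_factory=agent_factory,  # called to build a new agent per example
        evaluation=evaluation_config,
        verbose=True,  # print progress and a feedback summary
    )
```

Passing the factory function itself, rather than an agent instance, is what keeps memory from leaking between examples.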

5. Review the test results

The test results and feedback from evaluators are visible in the LangSmith app. You can review and analyze the results to understand the agent's performance and identify areas for improvement.
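For analysis outside the app, the runs can also be exported. The sketch below flattens run records into CSV text. It assumes each run exposes `name`, `inputs`, `outputs`, and `error` attributes, as the objects returned by the LangSmith client's `list_runs` method do. The helper name and the `"input"`/`"output"` keys are hypothetical.

```python
import csv
import io


def runs_to_csv(runs):
    """Flatten run records into CSV text for analysis outside LangSmith."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["name", "input", "output", "error"])
    for run in runs:
        writer.writerow([
            run.name,
            (run.inputs or {}).get("input", ""),
            (run.outputs or {}).get("output", ""),
            run.error or "",
        ])
    return buffer.getvalue()
```

A call such as `runs_to_csv(client.list_runs(project_name="<your-test-project>"))` would produce text that can be saved to a file or loaded into a spreadsheet.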

Conclusion

LangSmith represents a strategic approach to enhancing the reliability, performance, and quality of AI-driven software. As a backend companion for developers, it provides the tools necessary to scrutinize and perfect language models before deployment. Its emphasis on evaluation and traceability ensures applications align with user expectations and industry standards. In the rapidly progressing AI field, LangSmith's contribution lies in giving developers a structured environment to test and iterate on their LLM applications, assisting in translating AI's potential into tangible products.

For those eager to stay at the forefront of AI innovation and ensure their solutions meet the highest standards of excellence, exploring LangSmith is a step in the right direction. To learn more about how LangSmith can transform your AI development journey and for further insights into leveraging generative AI technologies within your organization, visit Kmeleon's website. Discover how our expertise in generative AI can drive your evolution in the ever-evolving tech landscape, ensuring your projects not only thrive but lead in innovation.